Active Inference for Autonomous Navigation: A Framework for Escaping Local Optima

Author

Richard Goodman

Date Published

Abstract

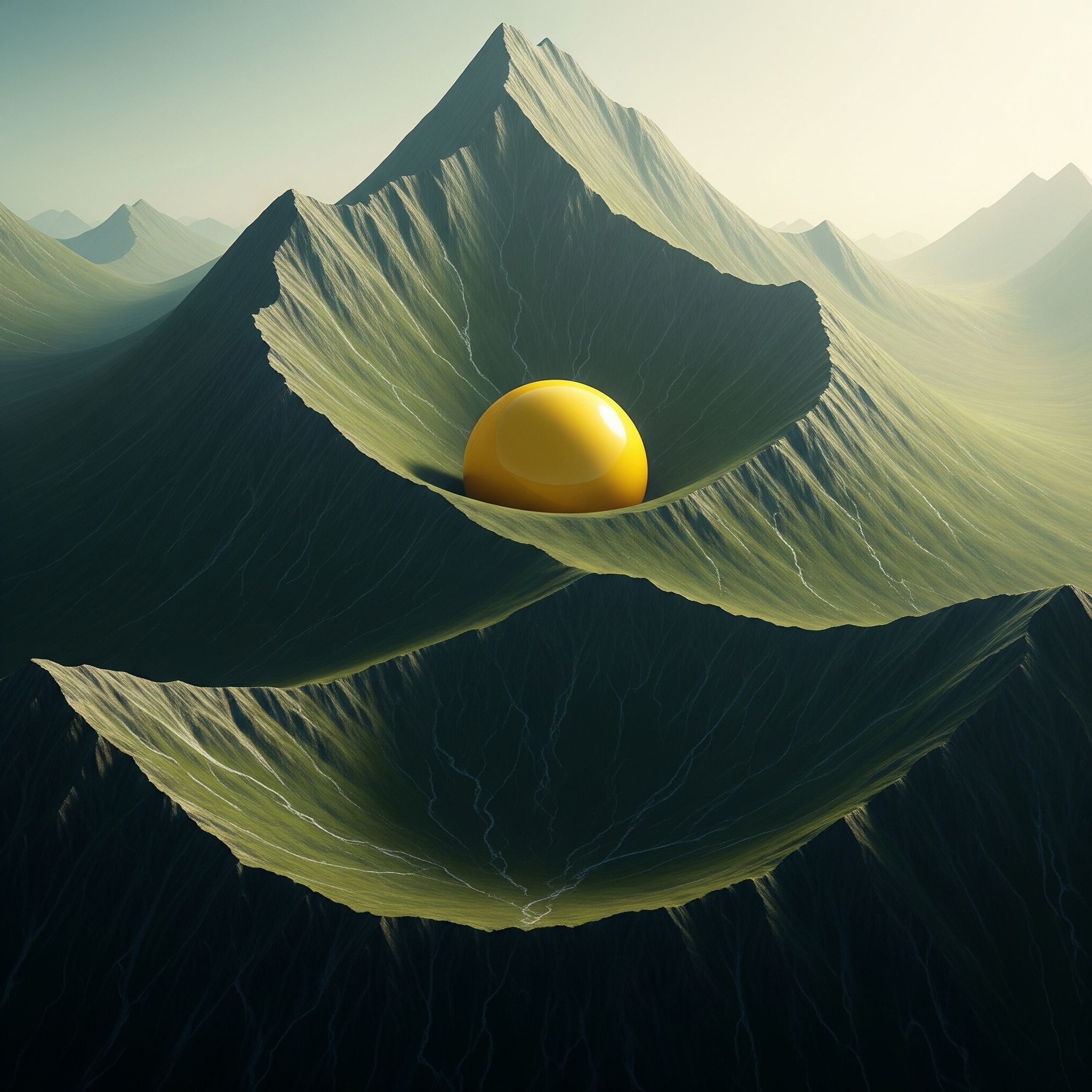

Standard optimization algorithms, such as gradient descent and evolutionary methods, are often constrained by their susceptibility to local optima. To address this, we investigate Active Inference, a first-principles framework derived from the Free Energy Principle, as a more robust method for state-space search and decision-making under uncertainty. We implement a computational agent in a generic, partially observable grid-world environment, where action selection is driven by the imperative to minimize expected free energy. Initial results demonstrate that the agent exhibits a clear preference for epistemic actions that resolve environmental uncertainty, even at the temporary cost of immediate progress toward a specified goal. This emergent information-seeking behavior suggests that Active Inference provides a powerful mechanism for developing autonomous systems that can effectively balance exploration and exploitation, thereby enabling more sophisticated and efficient navigation of complex problem landscapes.

GitHub repository: https://github.com/Abraxas1010/free_energy_principle

ResearchGate: https://www.researchgate.net/publication/392501413_Active_Inference_for_Autonomous_Navigation_A_Framework_for_Escaping_Local_Optima

Introduction/Background

A primary challenge in engineering autonomous systems is creating agents capable of robust decision-making in novel and uncertain situations. Conventional approaches, including many reinforcement learning algorithms, often model action selection as an optimization problem, seeking to maximize a reward function. However, these methods can be brittle, failing when an agent becomes trapped in a local optimum—a solution that is superior to its immediate neighbors but globally suboptimal. This limitation is particularly evident in sparse-reward or partially observable environments where the path to the optimal goal is not immediately apparent.
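To make the notion of a local optimum concrete, the following minimal Python sketch runs greedy hill climbing on a small one-dimensional landscape. The landscape values and the function name `hill_climb` are hypothetical illustrations, not part of the study. The point is only that a purely greedy rule stops at the first peak it reaches.

```python
# Hypothetical 1-D landscape: index = position, value = score to maximize.
LANDSCAPE = [1, 3, 5, 4, 2, 6, 9, 12, 8]


def hill_climb(landscape, start):
    """Greedy ascent: step to the best neighbor until no neighbor improves the score."""
    pos = start
    while True:
        neighbors = [p for p in (pos - 1, pos + 1) if 0 <= p < len(landscape)]
        best = max(neighbors, key=lambda p: landscape[p])
        if landscape[best] <= landscape[pos]:
            return pos  # no improving neighbor: a (possibly only local) optimum
        pos = best


global_best = max(range(len(LANDSCAPE)), key=lambda p: LANDSCAPE[p])
print(hill_climb(LANDSCAPE, start=0), global_best)  # 2 7
```

Started at position 0, the climber halts at position 2 (score 5) even though position 7 (score 12) is the global optimum. Started at position 5, it does reach position 7, so the outcome depends entirely on where the search begins.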

The Free Energy Principle (FEP) [1] offers a unifying theory of brain function that recasts intelligent behavior not as reward maximization but as the minimization of surprise (equivalently, the maximization of model evidence). Because surprise is generally intractable to compute directly, agents instead minimize variational free energy, a tractable upper bound on surprise. Under the FEP, an agent maintains an internal generative model of its world, and the process of minimizing free energy through both perception and action is known as Active Inference. Perception and action are thus complementary routes to keeping the agent's model an accurate, unsurprising account of its sensory inputs: perception minimizes surprise by updating the model's beliefs to match sensations, while action minimizes surprise by changing the world so that it conforms to the model's predictions.
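The relationship between free energy and surprise can be checked numerically. The sketch below assumes a toy discrete model: one hidden state with two values ("free" and "obstacle") and a binary observation, with hypothetical prior and likelihood numbers. It computes variational free energy F(q) = E_q[ln q(s) - ln p(o, s)], which equals KL[q(s) || p(s | o)] - ln p(o) and therefore never falls below the surprise -ln p(o). Perception, read as minimizing F over q, recovers the exact Bayesian posterior, where the bound becomes tight.

```python
import numpy as np

# Hypothetical toy generative model (values chosen for illustration only).
prior = np.array([0.5, 0.5])            # p(s): s = 0 "free", s = 1 "obstacle"
likelihood = np.array([[0.9, 0.2],      # p(o | s): row = observation o, column = state s
                       [0.1, 0.8]])


def free_energy(q, o):
    """Variational free energy F(q) = sum_s q(s) [ln q(s) - ln p(o, s)]; q must be strictly positive."""
    joint = likelihood[o] * prior       # p(o, s) for the observed o
    return float(np.sum(q * (np.log(q) - np.log(joint))))


def surprise(o):
    """Surprise -ln p(o), with p(o) = sum_s p(o | s) p(s)."""
    return float(-np.log(np.sum(likelihood[o] * prior)))


def posterior(o):
    """Exact Bayesian posterior p(s | o), the minimizer of F."""
    joint = likelihood[o] * prior
    return joint / joint.sum()


o = 1  # the agent observes outcome 1
for q in (np.array([0.5, 0.5]), np.array([0.3, 0.7]), posterior(o)):
    print(q.round(3), round(free_energy(q, o), 4), ">=", round(surprise(o), 4))
```

Every candidate belief q gives F at or above the surprise, and only the posterior attains it, which is why minimizing free energy serves as a proxy for minimizing surprise.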

Methodology

To investigate the practical utility of this framework for a client, we developed an agent in a 5×5 partially observable grid world. The environment contained a start state, a goal state, and static obstacles whose locations were initially unknown to the agent. The agent's task was to navigate from start to goal. This generic setup served as an initial step in validating the approach.
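A setup of this kind can be sketched in a few lines of Python. Everything specific here is an assumption for illustration and is not taken from the study's configuration: the start and goal corners, the obstacle layout, the representation of the agent's beliefs as a per-cell obstacle probability (0.5 for unexplored cells), and a noise-free sensor that reveals only the four cells adjacent to the agent.

```python
SIZE = 5
START, GOAL = (0, 0), (4, 4)              # hypothetical placement
OBSTACLES = {(1, 1), (2, 3), (3, 1)}      # hypothetical layout, hidden from the agent


def initial_belief():
    """P(obstacle) for every cell: 0.5 (maximally uncertain) except the known-free start and goal."""
    belief = {(r, c): 0.5 for r in range(SIZE) for c in range(SIZE)}
    belief[START] = belief[GOAL] = 0.0
    return belief


def neighbors(cell):
    """Cells reachable by one move up, down, left, or right, clipped to the grid."""
    r, c = cell
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < SIZE and 0 <= nc < SIZE:
            yield (nr, nc)


def observe(cell, belief):
    """Partial observability: only the four adjacent cells are sensed (noise-free in this sketch)."""
    for n in neighbors(cell):
        belief[n] = 1.0 if n in OBSTACLES else 0.0


belief = initial_belief()
observe(START, belief)
unknown = sum(1 for p in belief.values() if p == 0.5)
print(unknown)  # 21 cells remain unexplored
```

Keeping beliefs as probabilities rather than a binary map is what gives the agent something to be uncertain about, and therefore something for an epistemic term to reduce.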

The agent's action selection is governed by the minimization of Expected Free Energy (EFE), which decomposes into two key components:

- Instrumental Value: This component drives the agent toward its preferred states, which in this case correspond to the goal location. It scores a sequence of actions (a policy) by how probable its predicted outcomes are under the agent's prior preferences, so policies expected to lead to the goal receive the highest value.

- Epistemic Value: This component promotes actions that resolve uncertainty about the environment. It quantifies the information gain expected from a sequence of actions, compelling the agent to explore.
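For a discrete generative model, both terms can be computed in closed form for a single step. The sketch below is one common way of writing this and is not the study's code. With predicted states q(s), likelihood A[o, s] = p(o | s), and log preferences ln p~(o), instrumental value is E_q(o)[ln p~(o)] and epistemic value is the expected information gain, which for exact Bayesian updating equals the mutual information between hidden states and outcomes. EFE is then G = -(instrumental + epistemic). The scenario (a cell equally likely to be free or blocked) and the two hypothetical actions, `look` with an informative sensor and `advance_blind` with an uninformative one, are assumptions chosen to mirror the behavior described in the Results.

```python
import numpy as np


def entropy(p):
    """Shannon entropy in nats, ignoring zero-probability entries."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def expected_free_energy(qs, A, log_pref):
    """One-step EFE sketch for a discrete model.

    qs       : predicted hidden-state distribution q(s | action)
    A        : likelihood matrix, A[o, s] = p(o | s)
    log_pref : log prior preferences over outcomes, ln p~(o)
    Returns (G, instrumental, epistemic) with G = -(instrumental + epistemic).
    """
    qo = A @ qs                                            # predicted outcomes q(o | action)
    instrumental = float(qo @ log_pref)                    # expected log preference
    # Expected information gain = I(s; o) = H[q(o)] - E_q(s) H[p(o | s)]
    ambiguity = sum(qs[s] * entropy(A[:, s]) for s in range(len(qs)))
    epistemic = entropy(qo) - ambiguity
    return -(instrumental + epistemic), instrumental, epistemic


qs = np.array([0.5, 0.5])                      # cell equally likely free or blocked (assumed)
log_pref = np.log(np.array([0.5, 0.5]))        # no preference between the two readings (assumed)
A_look = np.array([[0.95, 0.05],               # informative sensor: reading usually matches the cell
                   [0.05, 0.95]])
A_blind = np.array([[0.5, 0.5],                # uninformative: reading independent of the cell
                    [0.5, 0.5]])

G_look, _, epi_look = expected_free_energy(qs, A_look, log_pref)
G_blind, _, epi_blind = expected_free_energy(qs, A_blind, log_pref)
print(f"look: G={G_look:.3f}  advance_blind: G={G_blind:.3f}")
```

Because both actions predict the same outcome distribution, their instrumental values are equal here, and the lower EFE of `look` comes entirely from its epistemic value. With non-flat preferences the instrumental term would also differ, and the two terms would trade off against each other.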

Under the standard decomposition, EFE equals the negative of the sum of these two values, so selecting the policy that minimizes EFE is equivalent to maximizing combined instrumental and epistemic value. The agent therefore balances the competing demands of exploitation (reaching the goal) and exploration (learning about the world) within a single objective. This contrasts with many traditional algorithms, in which the balance must be tuned manually through heuristics such as epsilon-greedy strategies.
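Once each candidate policy has an EFE, active inference models commonly convert these scores into a distribution over policies with a softmax, q(pi) = softmax(-gamma * G), where gamma is a precision parameter. The sketch below shows that step next to epsilon-greedy for contrast. The value gamma = 4, the EFE numbers, and epsilon = 0.1 are hypothetical. Note that gamma still controls how deterministic the choice is; what differs is that exploration in active inference is directed by the epistemic term inside G, whereas epsilon-greedy explores uniformly at random regardless of what the agent does not know.

```python
import numpy as np


def policy_posterior(G, gamma=4.0):
    """q(pi) = softmax(-gamma * G); gamma is a precision parameter (value assumed)."""
    logits = -gamma * np.asarray(G, dtype=float)
    logits -= logits.max()                 # subtract max for numerical stability
    p = np.exp(logits)
    return p / p.sum()


def epsilon_greedy(values, epsilon=0.1):
    """Contrast: a hand-tuned epsilon spreads exploration uniformly over all actions."""
    n = len(values)
    p = np.full(n, epsilon / n)
    p[int(np.argmax(values))] += 1.0 - epsilon
    return p


G = [1.2, 0.7, 1.5]                        # hypothetical EFE for three candidate policies
q_pi = policy_posterior(G)
p_eps = epsilon_greedy([-g for g in G])    # epsilon-greedy maximizes value, so negate G
rng = np.random.default_rng(0)
chosen = int(rng.choice(len(G), p=q_pi))   # sample a policy from q(pi)
print(q_pi.round(3), p_eps.round(3), chosen)
```

Both rules favor the policy with the lowest EFE, but only the softmax ranks the alternatives by how good (including how informative) they are; epsilon-greedy treats all non-greedy actions identically.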

Results

In simulation, the agent successfully reached the goal. Critically, the agent's trajectory was not the most direct path. Instead, the agent exhibited a clear preference for actions that resolved uncertainty about the environment, even at the cost of immediate progress toward the goal. For example, the agent would first move toward an ambiguous area to ascertain whether it contained an obstacle before committing to a path.

This information-seeking behavior was not explicitly programmed; it emerged naturally from the single imperative of minimizing EFE. The agent deviated from the shortest path to first build a more accurate internal model of its world, thereby avoiding potential dead-ends that might have trapped a less "curious" agent.
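How a single observation sharpens the agent's model can be illustrated with Bayes' rule for one grid cell. This is a hedged sketch: the noisy binary sensor and its hit and false-alarm rates are assumptions, not parameters reported for the study. With these rates, a "free" reading moves the obstacle probability from 0.5 to 0.1 and lowers the belief's entropy, which is the kind of uncertainty reduction the epistemic term rewards.

```python
import math


def update(p_obstacle, saw_obstacle, hit_rate=0.9, false_alarm=0.1):
    """Posterior P(obstacle | reading) for one cell under a noisy binary sensor (rates assumed).

    hit_rate    : P(sensor reports obstacle | obstacle present)
    false_alarm : P(sensor reports obstacle | cell free)
    """
    like_obstacle = hit_rate if saw_obstacle else 1 - hit_rate
    like_free = false_alarm if saw_obstacle else 1 - false_alarm
    numerator = like_obstacle * p_obstacle
    return numerator / (numerator + like_free * (1 - p_obstacle))


def binary_entropy(p):
    """Uncertainty (nats) of a Bernoulli belief; zero when the cell's status is certain."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))


prior = 0.5
post = update(prior, saw_obstacle=False)
print(round(post, 3), round(binary_entropy(prior), 3), round(binary_entropy(post), 3))
```

Repeating this update as the agent visits informative vantage points is one simple way to picture "building a more accurate internal model" before committing to a route.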

Discussion & Conclusion

Our results support the central hypothesis that an agent driven by Active Inference can effectively navigate a partially observable environment. The agent's emergent information-seeking behavior enables a more thorough search of the state space, reducing the risk of settling into local optima. This work aligns with a growing body of research demonstrating the power of Active Inference for creating flexible and adaptive agents.

For commercial applications, such as those being developed by VERSES AI, these principles are critical for moving beyond the limitations of standard optimization. By building systems that actively seek to understand their operational environment, we can develop more robust solutions for complex problems in supply chain logistics, autonomous robotics, and network optimization. This initial work supports the FEP as a promising framework for engineering the next generation of intelligent systems for the client.

References

[1] Friston, K. (2010). The free-energy principle: a unified brain theory? Nature Reviews Neuroscience, 11(2), 127–138.